ChatGPT is one of the fastest-growing consumer applications in history. It reached around 100 million users within just 2.5 months of its launch on 30 November 2022. To put that number in perspective, it took Instagram 2.5 years and WhatsApp 3.5 years to reach the same 100 million users.

The “GPT” in ChatGPT stands for Generative Pre-trained Transformer, a family of models developed by OpenAI. It is called Generative because it generates text; Pre-trained because the model is first trained on a large body of text before it is adapted for conversation and released; and Transformer because that is the name of the attention-based neural network architecture the model is built on.

ChatGPT is a Large Language Model (LLM), a type of machine learning model that learns to generate text from a large training dataset. In general, LLMs can perform a variety of tasks, such as translation, summarization, code generation, text correction, conversation, and writing poems. They are based on transformers, a type of neural network architecture. One of the big advantages of transformers is that they allow these models to be trained on large text datasets in parallel. Other models, such as BERT (by Google), are also built on transformers. Therefore, to understand ChatGPT, it is worth discussing the transformer architecture in a separate section.

Why are Transformers so important?

Before transformers came around, Recurrent Neural Networks (RNNs) were the standard architecture for understanding text and building language models. However, they never handled long sequences of text, such as long passages, well. They are also hard to train at scale because they suffer from exploding and vanishing gradients during training (to read more on the issue, refer to this blog). To mitigate this issue, LSTM (Long Short-Term Memory) models were introduced, which can handle longer sequences of text. Even so, transformer-based models have gained popularity because they handle long texts better still.
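To get a feel for the exploding and vanishing gradient problem, here is a minimal sketch. It assumes, as a simplification, that the gradient is multiplied by the same constant factor at every time step on its way back through the sequence; the function name `gradient_scale` and the factors 0.9 and 1.1 are made up for illustration.

```python
def gradient_scale(factor, steps):
    """Scale of a gradient after flowing back through `steps` time steps,
    assuming each step multiplies it by the same constant `factor`."""
    return factor ** steps


# A factor slightly below 1 shrinks the gradient towards zero (vanishing),
# while a factor slightly above 1 blows it up (exploding).
for factor in (0.9, 1.1):
    for steps in (10, 50, 100):
        scale = gradient_scale(factor, steps)
        print(f"factor={factor}, steps={steps}: {scale:.3e}")
```

The longer the sequence, the more extreme the effect, which is one reason plain RNNs struggle with long passages.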

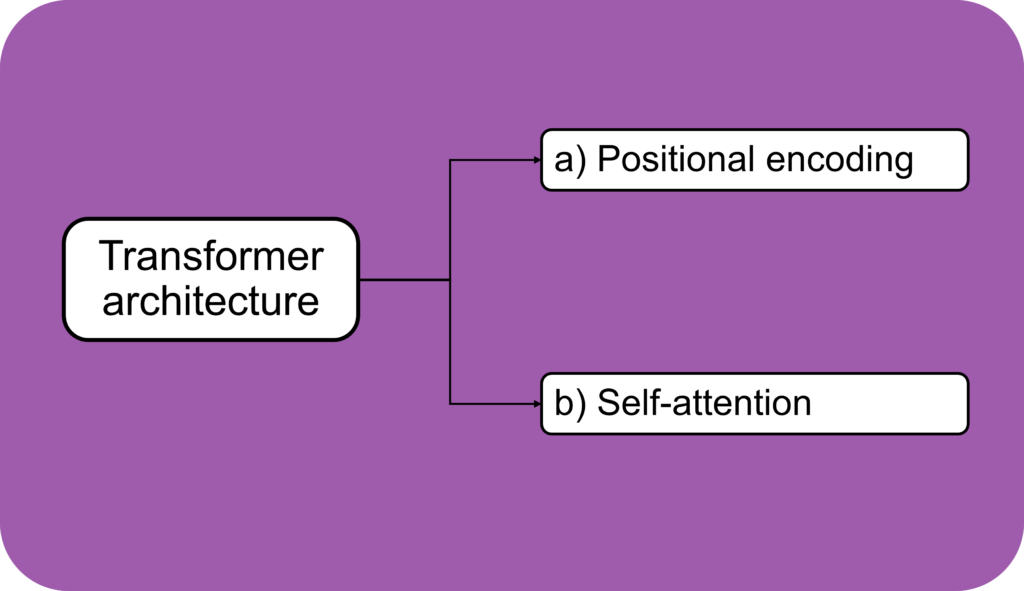

To understand this better, let us discuss the two key innovations that transformers brought to language models.

a) Positional encoding

Positional encoding assigns to each word in a sentence a vector that encodes the word’s position, so the order information is carried in the input data itself. This removes the need to feed the words of a sentence into the network in a ‘sequential’ manner (one by one) in order to train it. As a result, transformers can be trained on huge datasets using parallel computing, which makes training much faster than with RNNs or LSTMs, which need sequential input. To read more on this, refer here.
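As an illustration, here is a small sketch of one well-known scheme, the sinusoidal positional encoding from the original Transformer paper. This blog does not say which scheme ChatGPT uses (GPT-style models may use a different one, such as learned position vectors), so treat it only as an example of the idea. The helper names and the toy embeddings are hypothetical.

```python
import math


def positional_encoding(position, d_model):
    """Sinusoidal encoding of one position as a vector of length d_model."""
    encoding = []
    for i in range(d_model):
        # Dimensions 2k and 2k+1 share one frequency: 1 / 10000^(2k / d_model).
        angle = position / (10000 ** ((i - i % 2) / d_model))
        # Even dimensions use sine, odd dimensions use cosine.
        encoding.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return encoding


def add_positions(word_vectors):
    """Add the position vector to each word vector, so order lives in the data."""
    d_model = len(word_vectors[0])
    return [
        [w + p for w, p in zip(vector, positional_encoding(pos, d_model))]
        for pos, vector in enumerate(word_vectors)
    ]


# Toy 4-dimensional "word embeddings" for a three-word sentence (made-up values).
sentence = [[0.1, 0.2, 0.3, 0.4], [0.1, 0.2, 0.3, 0.4], [0.5, 0.5, 0.5, 0.5]]
for row in add_positions(sentence):
    print([round(x, 3) for x in row])
```

Note that the first two words have identical embeddings but end up with different vectors once their positions are added, so all words can be processed at once without losing the word order.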

b) Self-attention

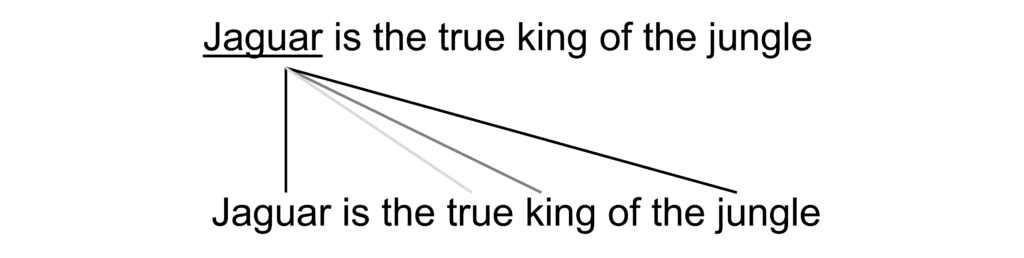

Attention is the mechanism that allows transformer models to attend to different parts of the input sequence in order to capture the context of each word. Consider the two example sentences below:

1) This model from jaguar has two doors

2) Jaguar is the true king of the jungle

In the above sentences, the word ‘jaguar’ has two different meanings. In a model that processes each word independently, it is hard to tell which meaning is intended, because the context of the word in the sentence is lost.

However, with self-attention, the model computes a vector of weights for each word in the sentence. This vector signifies the importance of the other words in relation to the word that is queried. So, in the second sentence, the model may learn a weight vector for the word ‘jaguar’ that puts most of its weight on ‘king’ and ‘jungle’.

These weights allow the model to get the context of the word ‘jaguar’. That is, based on the attention paid to specific words like ‘jungle’ and ‘king’, the model decides that ‘jaguar’ here refers to the animal and not the car brand. To read more on the self-attention mechanism, refer here.
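The idea can be sketched with scaled dot-product attention, the form of attention used in transformers. This is a simplified illustration: a real model uses learned query, key and value projections and much larger vectors, whereas here the raw word vectors play all three roles, and the 2-dimensional embeddings are hand-picked, hypothetical values.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(query, keys):
    """Scaled dot-product attention weights of one query over all keys."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    return softmax(scores)


def attend(query, keys, values):
    """Context vector: the values averaged using the attention weights."""
    weights = attention_weights(query, keys)
    return [sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))]


# Hand-picked toy embeddings (assumption): axis 0 ~ "animal", axis 1 ~ "car".
embeddings = {
    "jaguar": [1.0, 1.0],
    "the": [0.0, 0.0],
    "king": [2.0, 0.0],
    "jungle": [3.0, 0.0],
}
sentence = ["jaguar", "the", "king", "jungle"]
vectors = [embeddings[word] for word in sentence]

weights = attention_weights(embeddings["jaguar"], vectors)
for word, weight in zip(sentence, weights):
    print(f"{word}: {weight:.3f}")
print("context vector:", attend(embeddings["jaguar"], vectors, vectors))
```

With these toy values, ‘jungle’ receives the largest weight and ‘the’ the smallest, so the context vector for ‘jaguar’ is pulled towards the animal axis.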

How does ChatGPT work?

Now that we have a brief idea of the architecture behind ChatGPT, the next question is how the model answers almost anything that the user asks.

ChatGPT is built on a Large Language Model (LLM) trained on over 570GB of text data from sources that include the internet, digitized books, Wikipedia and more. That is more than any human could read in a single lifetime. Through training, the model learnt about 175 billion weight parameters of the neural network (both figures are the ones reported for GPT-3, the model family ChatGPT builds on). And that’s huge! Once trained, all that ChatGPT does is predict the next word of its response, one word at a time, given the prompt and the words generated so far. It just does so extremely well.
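The word-by-word prediction loop can be sketched as follows. This is a toy illustration, not how ChatGPT is implemented: the probability table `NEXT_WORD_PROBS` is made up, it looks only at the last word (a real LLM conditions on the whole text so far and computes the probabilities with its neural network), and it always picks the most likely word.

```python
# Hypothetical next-word probabilities, standing in for the neural network.
NEXT_WORD_PROBS = {
    "the": {"jaguar": 0.6, "jungle": 0.4},
    "jaguar": {"is": 0.9, "the": 0.1},
    "is": {"fast": 0.7, "the": 0.3},
    "fast": {"<end>": 1.0},
}


def generate(prompt, max_new_words=10):
    """Extend the prompt one word at a time, always taking the likeliest word."""
    words = list(prompt)
    for _ in range(max_new_words):
        candidates = NEXT_WORD_PROBS.get(words[-1])
        if not candidates:
            break  # The toy table knows nothing about this word.
        next_word = max(candidates, key=candidates.get)
        if next_word == "<end>":
            break  # The model predicts that the text is finished.
        words.append(next_word)  # The new word becomes part of the input.
    return words


print(" ".join(generate(["the"])))
```

Each predicted word is appended to the text and fed back in, which is why the response appears one word at a time.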

The next big challenge with AI is aligning its answers with human values. For example, an AI should not reflect any kind of racism in its answers. According to OpenAI, it should be truthful, helpful and harmless. ChatGPT addresses this problem with Reinforcement Learning from Human Feedback (RLHF). For this, OpenAI hired 40 contractors to rate the model’s responses during the training process. These ratings were then used to train another model, a reward model, that rewarded ChatGPT for responding with aligned text.
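To make the reward model idea more concrete, here is a sketch of a pairwise preference loss that is commonly used to train reward models from human comparisons. Whether ChatGPT’s training uses exactly this form is an assumption here, and the function name and reward scores are hypothetical.

```python
import math


def preference_loss(reward_preferred, reward_rejected):
    """Pairwise loss: -log(sigmoid(reward_preferred - reward_rejected)).

    It is small when the reward model scores the human-preferred response
    higher than the rejected one, and large when it gets the order wrong.
    """
    margin = reward_preferred - reward_rejected
    # -log(sigmoid(m)) is the same as log(1 + exp(-m)).
    return math.log(1.0 + math.exp(-margin))


# Hypothetical reward scores for two responses to the same prompt.
print(preference_loss(2.0, 0.0))  # reward model agrees with the human rater
print(preference_loss(0.0, 2.0))  # reward model disagrees with the human rater
```

Minimising this loss pushes the reward model to score the responses that raters preferred more highly, and that score can then serve as the reward signal during reinforcement learning.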

The trained model, together with its chat interface, gave rise to the ChatGPT that is widely used today. But when did it evolve to this stage?

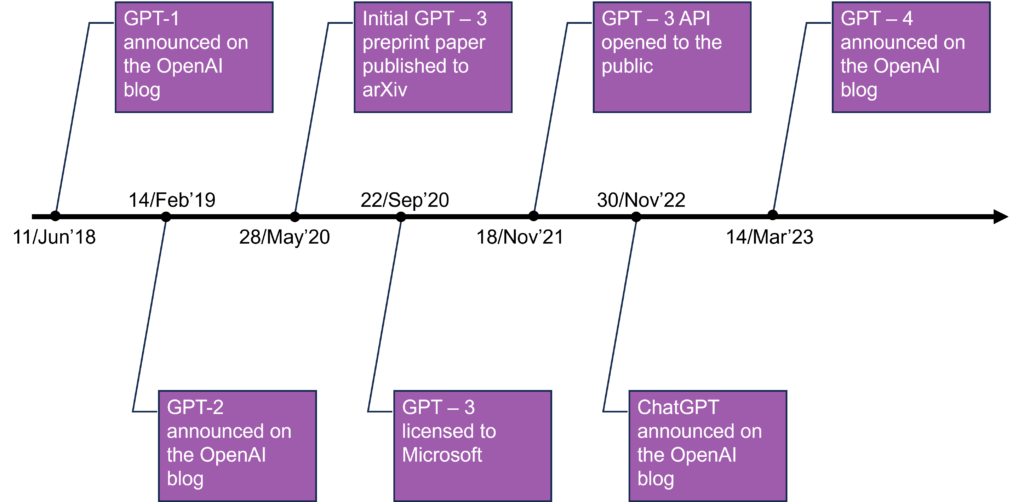

ChatGPT - Timeline

ChatGPT went through a few iterations before it was announced. However, if you look at the timeline, it did not take long to reach the state it is in today!

On a closing note, I would like to add that although ChatGPT can generate human-like responses to any number of questions, it does not know anything about the human world, and it should not be relied on for anything extremely important. When the user is not asking the tool a question, there is nothing going on in the mind of ChatGPT. It is just an empty space. No experience of the human world is being generated. As already said, it just predicts the next word, and only after the user has fed in a prompt.

That means that, even though the technology behind the tool is truly remarkable in what it can do, we should not overestimate its capabilities by ignoring the limitations of such AI tools, or of any similar tools developed in the future.

This blog is a gentle introduction to ChatGPT and the technology behind it. It can be a good starting point for diving deeper into the concepts currently employed in the model. I encourage readers to follow the provided links to gain a better understanding of them.

To wrap up, here are some fun facts about ChatGPT 🙂

Fun Facts

- ChatGPT generated 1.6 billion visits in June 2023

- OpenAI reportedly spends about $700,000 every day to run ChatGPT

- Seven nations including China and Russia have restricted access to ChatGPT

- The highest percentage of users is from the USA (15.2%), followed by India (6.3%), Japan (4.0%) and Canada (2.7%)

- ChatGPT supports more than 95 languages

Hope you learnt something new today from this blog.
